Eps 1: Dangers of Deepfakes in Disinformation

— Dangers of Deepfakes For Counterdisinformation

| Host image: | StyleGAN neural net |
|---|---|
| Content creation: | GPT-3.5, |

Host

Franklin Steward

Podcast Content

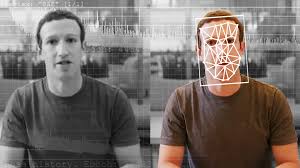

To create deepfakes, people train computers to imitate real humans so that the resulting videos look real. Deepfake technology allows anyone with a computer and an Internet connection to produce lifelike photos and videos in which people appear to say and do things they never actually said or did. One of the more problematic uses of deepfake technology is when the image of a person, usually a woman, is manipulated and placed in a sexually explicit video, making it seem as if the targeted person is engaging in the sexual activity, said Mark Berkman, executive director of the Organization for Social Media Safety, a nonprofit that works to make social media safer through advocacy and education.

Deepfakes can be used to exacerbate social divisions by spreading misinformation about communities through manipulated video and audio. They are best suited to spreading disinformation in settings where video evidence is usually persuasive.

Even more insidiously, the mere possibility that a video might be a deepfake can sow confusion and enable political deception, whether or not deepfake techniques were actually used. The reality of deepfake videos, images, and audio is far more dangerous than phony videos featuring famous Hollywood figures. As deepfakes gain wider exposure, public officials caught on camera may exploit the liar's dividend by claiming that a genuine video is a deepfake.

On another note, some scholars argue that deepfakes and other forms of disinformation fuel the so-called liar's dividend problem. Academics who are less worried about deepfakes believe that the technology is only as destructive as other forms of online misinformation. Those more worried about the technology's potential for abuse examine how deepfakes directly affect consumers' actions and attitudes, whereas those who are less worried examine how the technology feeds into the broader misinformation landscape.

Deepfake technologies are also quite relevant to Lazo's studies, says Lazo, but more study is needed of the different types of misinformation, how each is propagated, and its psychological effects on media consumers.

A recent study found that deepfake videos are much more likely to influence consumers' political attitudes than other types of online misinformation. While the threat of deepfake videos having significant political effects has been much discussed over the past few years, the technology's political effects have so far been limited. Deepfake harm has certainly been seen in pornography, where individuals have had their images used without consent, but nothing has been as bad as what people really fear, namely incriminating, hyperrealistic deepfakes of presidential candidates saying things that sway key voting centers, said Henry Ajder, a specialist in synthetic media and artificial intelligence.

Beyond the dangers and damage caused by deepfake materials themselves (financial losses, damaged reputations, trust issues, and so forth), there is growing worry about the way false information alters people's perceptions of reality.

As the technology becomes available to every computer user, more researchers are focusing on detecting deepfakes and on ways to regulate them. Numerous corporate and academic initiatives are now under way to build technologies that can detect deepfakes.

Technology companies like Facebook and Google are devoting resources to detecting deepfakes, with efforts like Facebook's Deepfake Detection Challenge and Google's recent ban on deepfakes. Many of today's deepfakes are created using AI techniques such as machine learning or deep learning. The underlying technology that makes deepfakes possible is a branch of deep learning known as generative adversarial networks (GANs).

While only skilled experts are capable of creating the most realistic deepfakes, anyone with an iPhone and a single photo can now use free apps to make basic deepfakes. Apps and other software for creating deepfakes now make the process as easy as uploading an image or video file.

Deepfakes, a portmanteau of "deep learning" and "fake," are videos, photos, or audio recordings altered to make fake content look real. In simplified terms, a deepfake is a video faked using deep learning, says Paul Barrett, an associate professor of law at New York University.

Because deepfakes use artificial intelligence techniques to layer fake information over existing content, they are hard for companies and individuals to detect and fix. The fact that deepfakes may be both persuasive and hard to identify raises concerns about how the technology could undermine a court's truth-seeking duty.

It is far harder to sway public discourse through deepfakes or manipulated media, particularly when the deepfakes depict people who are still alive and can respond immediately. Even so, deepfakes can be used to disseminate harmful misinformation rapidly and at large scale, eroding confidence in institutions. In escalating disinformation campaigns, unnoticed textual deepfakes can be used alongside sound, video, and images to sway audiences, instill fear and suspicion, and erode trust.

Deepfakes can also help public figures conceal their immoral actions under the cover of deepfakes and fake news by calling genuinely damaging footage fake, a tactic known as the liar's dividend. While artificial intelligence may be used to create deepfakes, it may also be used to spot them, wrote Brookings's Villasenor in February. Deepfakes (the term derives from "deep learning" and "fakes") are media items that are faked or manipulated using AI; most commonly, existing images or videos are combined with or overlaid on source material using machine learning techniques.